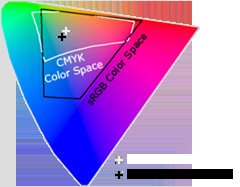

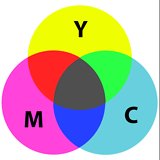

Color spaces cover different ranges of colors (a color space's range is called its gamut) because color values are eventually discretized ("digitized"), stored in a fixed number of bits, and then reproduced on a monitor, in print, etc. The goal is to store as much useful color information as possible in a given number of bytes.
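A rough one-dimensional sketch of that trade-off follows. It assumes evenly spaced code values along each channel, which is a simplification because real color spaces also apply transfer curves. A fixed number of bits gives a fixed number of distinct values, so spreading them over a wider range makes each step coarser. The range widths are arbitrary illustrative units, not measured gamuts.

```python
def quantization_step(range_width: float, bits: int) -> float:
    """Distance between adjacent code values when `bits` bits span `range_width`
    (assumes evenly spaced, linear code values)."""
    levels = 2 ** bits  # number of distinct code values per channel
    return range_width / (levels - 1)


def distinct_colors(bits_per_channel: int, channels: int = 3) -> int:
    """Total number of distinct color values a pixel format can represent."""
    return 2 ** (bits_per_channel * channels)


# Same 8 bits per channel, spread over a narrow and a twice-as-wide range.
narrow = quantization_step(1.0, 8)
wide = quantization_step(2.0, 8)
print(f"step over range 1.0: {narrow:.5f}, over range 2.0: {wide:.5f}")
print(distinct_colors(8))  # 16777216
```

The count of distinct colors stays the same no matter how wide the gamut is; only the spacing between them changes.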

Now, if you have a device that can only reproduce CMYK, e.g. a printer, you obviously do not want to spend bits on color values far outside its gamut, because those colors cannot be reproduced and that information is lost anyway. You could store CMYK images in ProPhoto or Lab using 32-bit color representations, but you would be wasting storage space.
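Uncompressed storage grows linearly with the bits spent per channel. The sketch below compares 8-bit CMYK with Lab at 32 bits per channel. Reading "32-bit" as 32 bits per channel (e.g. floating point) is an assumption, and the 3000 × 2000 pixel size is hypothetical; the figures are plain arithmetic, not measurements of real files.

```python
def image_bytes(width: int, height: int, channels: int, bits_per_channel: int) -> int:
    """Uncompressed size in bytes, rounding the total bit count up to whole bytes."""
    total_bits = width * height * channels * bits_per_channel
    return (total_bits + 7) // 8


W, H = 3000, 2000  # hypothetical image size in pixels

cmyk = image_bytes(W, H, channels=4, bits_per_channel=8)   # 8 bits per CMYK channel
lab = image_bytes(W, H, channels=3, bits_per_channel=32)   # assumed 32 bits per Lab channel

print(cmyk, lab, lab / cmyk)  # 24000000 72000000 3.0
```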

Imagine that your video card can only display 16 colors (as in the old EGA days). How would you choose those 16 colors? You would check which colors the monitor can display, and then pick 16 of them systematically so that they cover as much of that gamut as possible. You would then use four bits to represent each color, since 2^4 = 16.
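A minimal sketch of mapping colors onto such a 16-entry palette. The palette here is illustrative: it uses the familiar RGBI colors often associated with EGA's default palette, written as 8-bit RGB triples. Picking the nearest entry by squared RGB distance is a simplifying assumption; a perceptual metric (e.g. distance in Lab) would usually match colors better.

```python
# Illustrative 16-entry palette (the familiar RGBI colors), as 8-bit RGB triples.
PALETTE = [
    (0, 0, 0), (0, 0, 170), (0, 170, 0), (0, 170, 170),
    (170, 0, 0), (170, 0, 170), (170, 85, 0), (170, 170, 170),
    (85, 85, 85), (85, 85, 255), (85, 255, 85), (85, 255, 255),
    (255, 85, 85), (255, 85, 255), (255, 255, 85), (255, 255, 255),
]

# 16 entries fit exactly in a 4-bit index.
assert len(PALETTE) == 2 ** 4


def nearest_index(rgb):
    """Return the palette index (0-15) closest to `rgb` by squared RGB distance."""
    return min(
        range(len(PALETTE)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(rgb, PALETTE[i])),
    )


print(nearest_index((250, 250, 250)))  # 15 (white)
print(nearest_index((0, 0, 180)))      # 1 (blue)
```

Any color outside what the palette covers simply snaps to its closest entry, which is exactly where out-of-gamut information is lost.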

Now, if you know that your monitor can only display those 16 colors, you would not store your Adobe RGB or 14-bit RAW images as they are; you would convert them to the 4-bit palette and store a much smaller file.
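The storage saving comes from keeping only the 4-bit palette index per pixel. A sketch of packing two such indices into each byte follows; the function names are hypothetical, and padding an odd count with a zero index is an arbitrary choice for this example.

```python
def pack_nibbles(indices):
    """Pack 4-bit palette indices (0-15) two per byte; pad an odd count with 0."""
    if any(not 0 <= i <= 15 for i in indices):
        raise ValueError("palette indices must fit in 4 bits")
    padded = list(indices) + [0] * (len(indices) % 2)
    # High nibble holds the first index of each pair, low nibble the second.
    return bytes((hi << 4) | lo for hi, lo in zip(padded[0::2], padded[1::2]))


def unpack_nibbles(data, count):
    """Inverse of pack_nibbles: recover the first `count` indices."""
    out = []
    for byte in data:
        out.extend((byte >> 4, byte & 0x0F))
    return out[:count]


packed = pack_nibbles([15, 0, 1])
print(packed.hex())                  # f010
print(unpack_nibbles(packed, 3))     # [15, 0, 1]
```

At 4 bits per pixel, such a file needs less than a third of the bits of a 14-bit-per-pixel RAW file, and one sixth of an 8-bit-per-channel RGB file.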

To put it another way: maybe you work with ProPhoto images, but they will be viewed on average TFT monitors, whose gamut is far smaller than the ProPhoto gamut. There is no point in transferring and displaying ProPhoto images on those monitors, so you convert them to smaller images that still cover the entire gamut of a TFT monitor. (While editing, though, you still want to work in the largest gamut possible to avoid artifacts.)

To sum up: you want to cover as many reproducible colors as possible with as few bits as possible, and each color space is a compromise between the two.